Modelling and simulating the human heart, lungs, or brain: for such tasks, the open-source software package deal.II is highly recommended. Based on the C++ programming language, it comprises around 600,000 lines of code and provides many mathematical tools for developing innovative solvers for partial differential equations. These, in turn, form the basis for calculating fluid and gas flows or modelling the deformation of solid bodies – fundamental requirements for digitally representing and simulating human organs. Martin Kronbichler, a professor of mathematics at Ruhr University Bochum, co-developed this software library and currently leads a European interdisciplinary project. He and partners from 12 other universities and research institutions, including the Leibniz Supercomputing Centre (LRZ), aim to adapt deal.II and its associated codes and applications to the next generation of heterogeneous exascale supercomputers. “Whenever a new computer system arrives, it means a lot of work – especially adapting the code,” says Kronbichler. “It’s an exciting time when we, as mathematicians, can re-evaluate decisions made 10 or 15 years ago and ask whether they still lead to efficient execution on current hardware – or whether we need to take entirely new directions.”

deal.II provides the shared mathematical tools for differential equations that allow different project teams to adapt existing programs and algorithms or build additional application-specific models. The researchers are particularly focused on tools for organ modelling and flow simulation. Based on deal.II, a new exascale framework called "dealii-X" is being developed, specialised for creating digital twins of the human body. The first project phase focuses on the algorithms that control the program flow, such as the work steps or data transfer. “On the software side, we can continue using many features – about 80 to 90 percent of the code will still run on CPUs,” explains Kronbichler. “The remaining 10 to 20 percent still leaves plenty of code and work – but it's where we can achieve the greatest speedup on GPU.”

To accelerate results, heterogeneous supercomputers rely on a combination of Central Processing Units (CPUs) and Graphics Processing Units (GPUs), often with additional accelerators. The interaction of these components must be re-orchestrated to enable more sustainable and efficient computing. “Most simulations rely on a variety of mathematical components, but they typically result in linear and nonlinear systems of equations – and those are ideal for GPUs,” says Kronbichler. GPUs are optimised for fast, highly parallel computation and data processing, and they typically use less energy per calculation than CPUs, which also handle control functions. “deal.II contains many algorithms that we can reformulate to rebalance computations and data access from processor cores. Usually, we reduce data access by performing redundant calculations.” Data transfer accounts for a significant portion of energy consumption in supercomputing. To reduce it, the team relies on matrix-free operators in Krylov subspace and multigrid methods: instead of storing a matrix in a traditional data structure and reading it from memory, the operator's action is recomputed each time it is needed.
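The matrix-free idea can be illustrated with a minimal, self-contained C++ sketch. It does not use the deal.II API; the names `apply_laplacian`, `dot`, and `solve_cg` are hypothetical and chosen only for this illustration. A conjugate gradient solver, one of the simplest Krylov subspace methods, only needs the product of the operator with a vector, so the example recomputes the stencil of a one-dimensional model problem on the fly instead of loading stored matrix entries.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;

// Matrix-free action y = A*x for the 1D stencil (-1, 2, -1) with zero
// boundary values. No matrix entry is ever stored: the coefficients are
// recomputed in every call, trading arithmetic for memory traffic.
void apply_laplacian(const Vector &x, Vector &y)
{
  const std::size_t n = x.size();
  for (std::size_t i = 0; i < n; ++i)
    {
      const double left  = (i > 0) ? x[i - 1] : 0.0;
      const double right = (i + 1 < n) ? x[i + 1] : 0.0;
      y[i] = 2.0 * x[i] - left - right;
    }
}

// Euclidean inner product of two vectors of equal length.
double dot(const Vector &a, const Vector &b)
{
  double sum = 0.0;
  for (std::size_t i = 0; i < a.size(); ++i)
    sum += a[i] * b[i];
  return sum;
}

// Unpreconditioned conjugate gradient method. The operator enters only
// through the callable 'apply', so any matrix-free implementation fits.
// Stops when the residual norm drops below tol * ||b|| or after max_iter
// iterations; returns the number of iterations performed.
template <typename Operator>
unsigned int solve_cg(Operator apply, const Vector &b, Vector &x,
                      const double tol, const unsigned int max_iter)
{
  const std::size_t n = b.size();
  Vector r(n), p(n), Ap(n);

  apply(x, Ap);
  for (std::size_t i = 0; i < n; ++i)
    r[i] = b[i] - Ap[i];
  p = r;

  double       rr   = dot(r, r);
  const double stop = tol * tol * dot(b, b);
  unsigned int it   = 0;

  while (it < max_iter && rr > stop)
    {
      apply(p, Ap);
      const double alpha = rr / dot(p, Ap);
      for (std::size_t i = 0; i < n; ++i)
        {
          x[i] += alpha * p[i];
          r[i] -= alpha * Ap[i];
        }
      const double rr_new = dot(r, r);
      const double beta   = rr_new / rr;
      for (std::size_t i = 0; i < n; ++i)
        p[i] = r[i] + beta * p[i];
      rr = rr_new;
      ++it;
    }
  return it;
}
```

Calling `solve_cg(apply_laplacian, b, x, 1e-10, 200)` with a right-hand side `b` and a zero initial guess `x` overwrites `x` with the approximate solution. Production codes evaluate far more complex operators, such as finite element integrals on unstructured meshes, but the principle is the same: redundant arithmetic replaces memory access.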

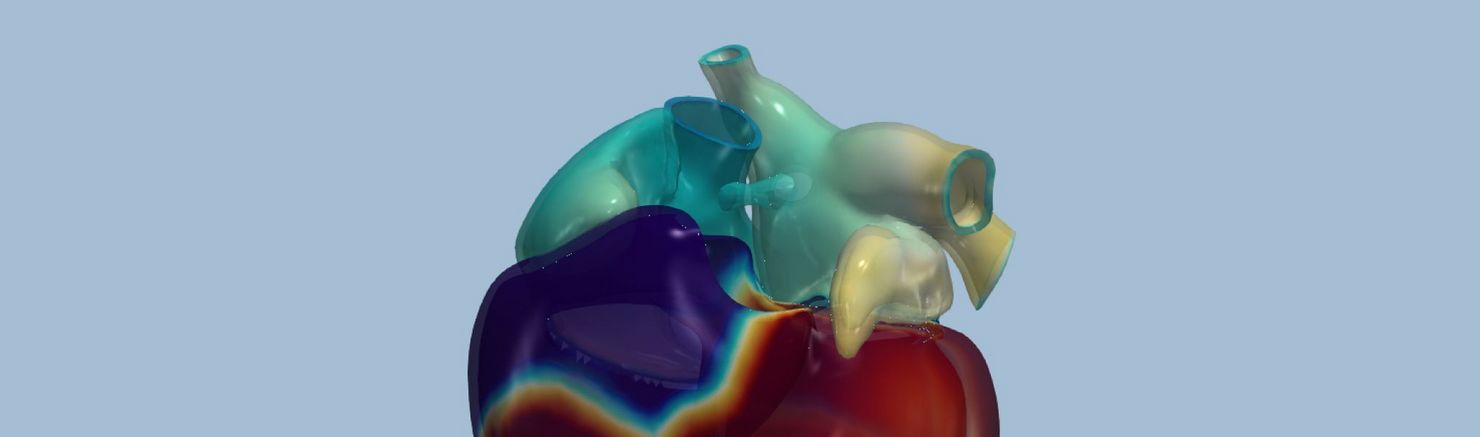

In general, as computational power increases, so do researchers’ expectations: they want to incorporate and model more parameters in their simulations. Application specialists in the interdisciplinary dealii-X project are already optimising programs to simulate organs and bodily functions in greater detail. dealii-X includes simulation tools for the brain, lungs, blood vessels, and liver. The project team includes not only computer scientists, mathematicians, and software engineers, but also biomechanics experts. “On one hand, we're adapting code to new technologies; on the other, stronger computers now allow us to model processes that were previously impossible to calculate,” Kronbichler explains. Existing simulations should also be executed more quickly: “With each generation of supercomputers, it becomes harder to fully harness and leverage their technical potential.” To better understand the increasingly complex development of code and algorithms, all adaptations and new developments are tested on the heterogeneous supercomputers at LRZ. These tests produce performance benchmarks that guide further adjustments. The experience gained also helps to optimise high-performance computing (HPC) systems for specific software and for efficiency, for example by adjusting clock rates.

Ultimately, dealii-X isn’t only about supercomputing: some research groups are also working on methods and models for artificial intelligence (AI). Statistical computations can enhance mathematical-physical models, and AI models can be trained using simulation results to quickly generate additional scenarios. “The complexity of the underlying mathematical models has so far prevented simulation knowledge from being transferred into clinical practice,” Kronbichler notes. By the end of 2026, dealii-X aims to deliver tools that allow the results of mathematical-physical models, or their digital organ twins, to be integrated into software for hospitals and clinics. Digital hearts, lungs, or blood vessels could then be personalised with patient data to support therapies. (vs)